I have an application that feeds periodic state into an influxdb 2 table. This data can be queried rather simply:

from(bucket: "vpp")

|> range(start: vTimeRangeStart, stop: v.timeRangeStart)

|> filter(fn: (r) => r.uuid == "${uuid}" and r._field == "State")|

|> last()

|> keep(columns: ["_time", "_value"])

This sort of works, but it causes an unsightly gap in the data. A state exists there, but it is before vTimeRangeStart so the query doesn't see it.

My flux solution to this problem is to fetch an initial value up to 24 hours prior to vStartTime. That proved to be a little tricky but Flux does provide an experimental way to manipulate times:

initialStateTimeRangeStart = experimental.subDuration(

d:24h,

from: v.timeRangeStart

)

d:24h,

from: v.timeRangeStart

)

Using this you can then get an initial state:

initial=from(bucket: "vpp")

|> range(start: initialStateTimeRangeStart, stop: v.timeRangeStart)

|> filter(fn: (r) => r.uuid == "${uuid}" and r._field == "State")

|> last()

|> keep(columns: ["_time", "_value"])

Here is the complete query fetches the initial state and appends it to the recent data:

import "experimental"

initialStateTimeRangeStart = experimental.subDuration(

d: 24h,

d: 24h,

from: v.timeRangeStart

)

)

initial=from(bucket: "vpp")

|> range(start: initialStateTimeRangeStart, stop: v.timeRangeStart)

|> filter(fn: (r) => r.uuid == "${uuid}" and r._field == "State")

|> last()

|> keep(columns: ["_time", "_value"])

recent=from(bucket: "vpp")

|> range(start: v.timeRangeStart, stop: v.timeRangeStop)

|> filter(fn: (r) => r.uuid == "${uuid}" and r._field == "State")

|> keep(columns: ["_time", "_value"])

union(tables: [initial, recent])

|> sort(columns: ["_time"])

|> yield()

|> sort(columns: ["_time"])

|> yield()

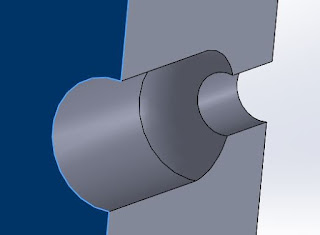

with a pleasant result that extends the previous state on to the graph:

A few things to watch out for:

- It appears to me that v.timeRangeStart is a 'time' in grafana and a 'duration' in chronograf (or influx 2). For most queries you won't notice this, as range() accepts either, but in this case, in chronograf you have to convert the time to a duration first, so the time calculation is a bit different (see below)

- The documentation for union warns you that the sort order is not necessarily maintained, and they are right. That sort() at the end is required.

- Multiple yields lets you see what's happening along the way, so feel free to inital |> yield(name: "initial") and recent |> yield(name: "recent") if it helps with debugging.

As I mentioned, in the chronograf or influx data explorer you need to modify the time offset calculation:

initialTimeRangeStart = experimental.subDuration(

d: 24h,

from: experimental.addDuration(

d: duration(v: v.timeRangeStart),

to: now()

)

)

I don't find this ideal but it's still useful to know how to do it, as developing queries in influxdb data explorer is something I find easier as it seems to give more meaningful error messages when things go haywire, and it's a little better at presenting the raw query results.

Note: I'm not sure that anybody reads any of this, I know that the search indexes all cover it, so perhaps somebody will find this useful some day. If you're that person, feel free to comment here so I know someone is listening, thanks in advance!